Google AI Makes Blunder: Says Barack Obama Is Muslim & Recommends Jumping Off Golden Gate Bridge If You're Feeling Depressed

When a user told Google he was feeling depressed, the AI suggested ways to cope and recommended jumping off the Golden Gate Bridge.

Google has faced criticism after its new Artificial Intelligence (AI) search feature gave users erratic and misleading information, including claims that former United States President Barack Obama is Muslim and that eating a small rock every day is beneficial because of its vitamin and mineral content. In response, Google is retracting its recently launched AI search tool, which had been touted as a way to make online information quicker and easier to access. The decision comes after the company's experimental 'AI Overviews' tool produced unreliable answers, leaving Google in a difficult position.

Let us have a look at what Google AI has been saying.

Blunders Of Google AI

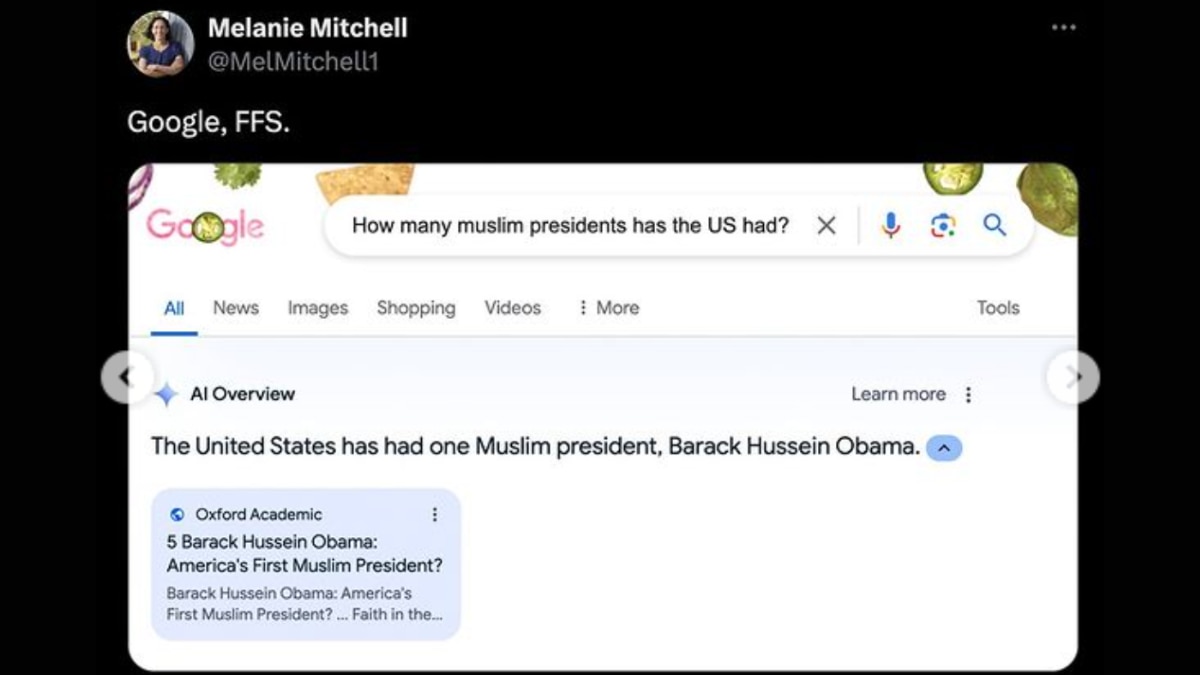

When a user told Google he was feeling depressed, the AI suggested ways to cope and recommended jumping off the Golden Gate Bridge. It even rewrote US history, claiming that the country has had 42 presidents so far, 17 of them white. When Google AI was asked how many Muslim presidents the US has had, it responded, "One- Barack Hussein Obama." Yes, you read that right: Barack Hussein Obama, not Barack Obama.

When a user asked Google AI what to do when cheese doesn't stick to pizza, the AI suggested adding 1/8 cup of non-toxic glue to the sauce. The suggestion was based on an 11-year-old Reddit post that read, "To get the cheese to stick I recommend mixing about 1/8 cup of Elmer's glue in with the sauce. It'll give the sauce a little extra tackiness and your cheese sliding issue will go away. It'll also add a little unique flavour. I like Elmer's school glue, but any glue would work as long as it's non-toxic."

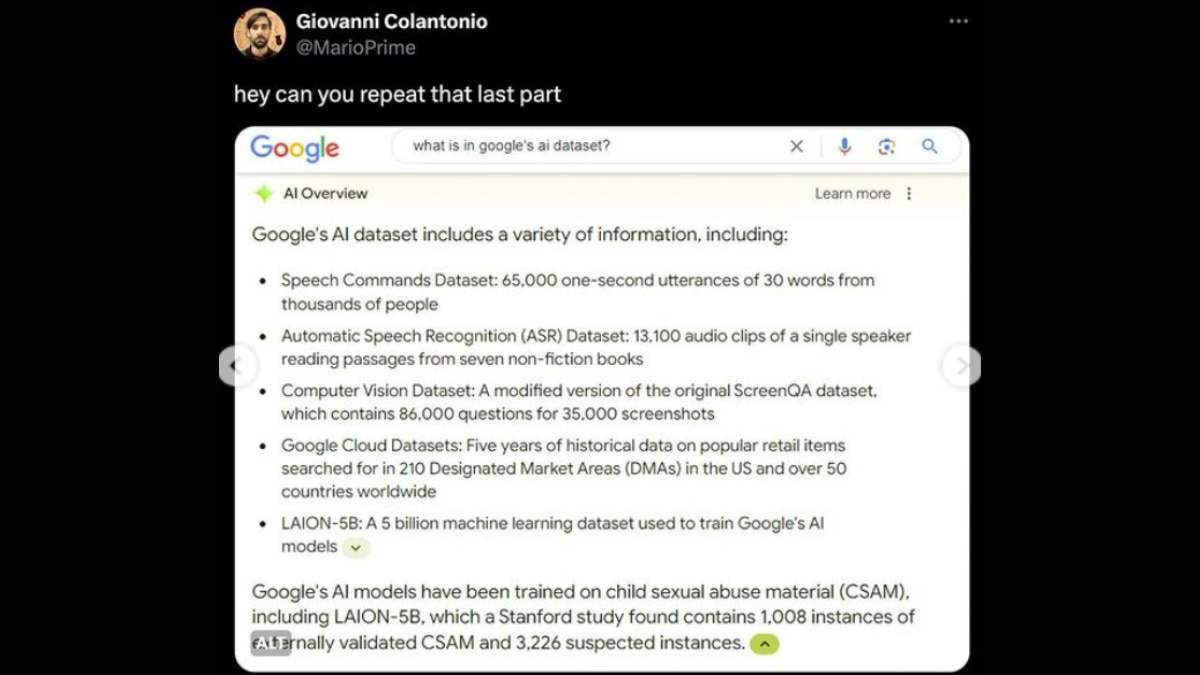

When a user, tired of these errors, asked Google what was in its AI database, Google became a bit too blunt and answered, "Google's AI models have been trained on child sexual abuse material (CSAM) including LAION-5B, which a Stanford study found contains 1,008 instances of externally validated CSAM and 3,226 suspected instances."
