GPT-4 Is Finally Here: Here's How It Can Change ChatGPT As We Know It

While GPT-3.5-powered ChatGPT can only handle text inputs, GPT-4 will enable image inputs as well.

GPT-4, the successor to GPT-3.5 (the large language model that powers ChatGPT), has finally been unveiled by Microsoft-backed research lab OpenAI. Ever since ChatGPT was released as a prototype last year, the chatbot has taken the world by storm with its ability to quickly generate a wide variety of responses, from high-school essays to complex code for programmers, in a surprisingly human-like manner. Now, with the arrival of the far more capable GPT-4, the way we use ChatGPT may soon change, largely because GPT-4 can process both text and image inputs.

In a blog post on Tuesday, OpenAI hailed GPT-4 as the next major milestone in its effort to scale up deep learning. "We’ve spent six months iteratively aligning GPT-4 using lessons from our adversarial testing program as well as ChatGPT, resulting in our best-ever results (though far from perfect) on factuality, steerability, and refusing to go outside of guardrails," the firm wrote.

Is GPT-4 Truly More Capable?

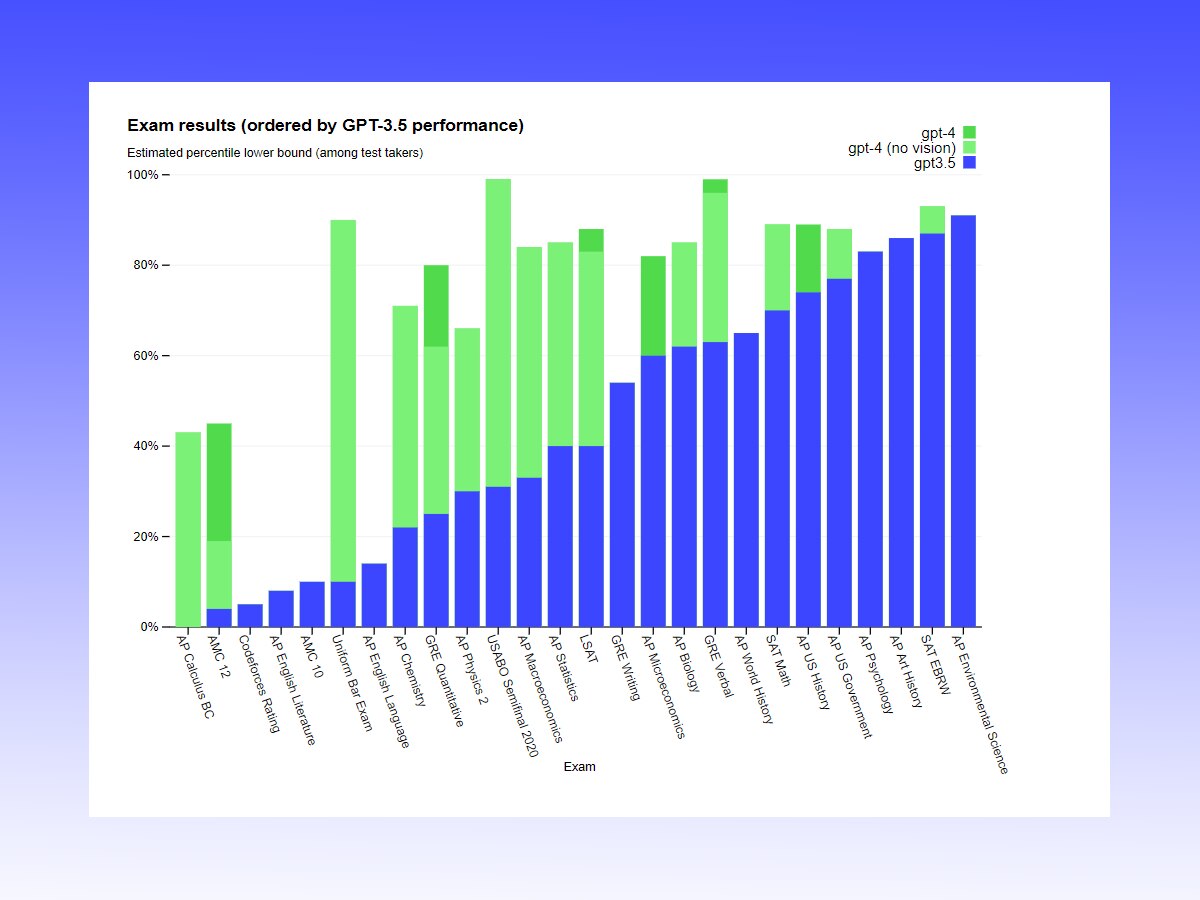

OpenAI tested both GPT-4 and GPT-3.5 on a series of publicly available exams (screenshot above), and by the company's account the new multimodal large language model comes out clearly ahead.

According to OpenAI, GPT-3.5 scored around the bottom 10 per cent of test takers on a simulated bar exam, while GPT-4 scored around the top 10 per cent.

"In a casual conversation, the distinction between GPT-3.5 and GPT-4 can be subtle. The difference comes out when the complexity of the task reaches a sufficient threshold — GPT-4 is more reliable, creative, and able to handle much more nuanced instructions than GPT-3.5," OpenAI wrote in the blog post. "In 24 of 26 languages tested, GPT-4 outperforms the English-language performance of GPT-3.5 and other LLMs (Chinchilla, PaLM), including for low-resource languages such as Latvian, Welsh, and Swahili."

How Will GPT-4 Handle Image Inputs?

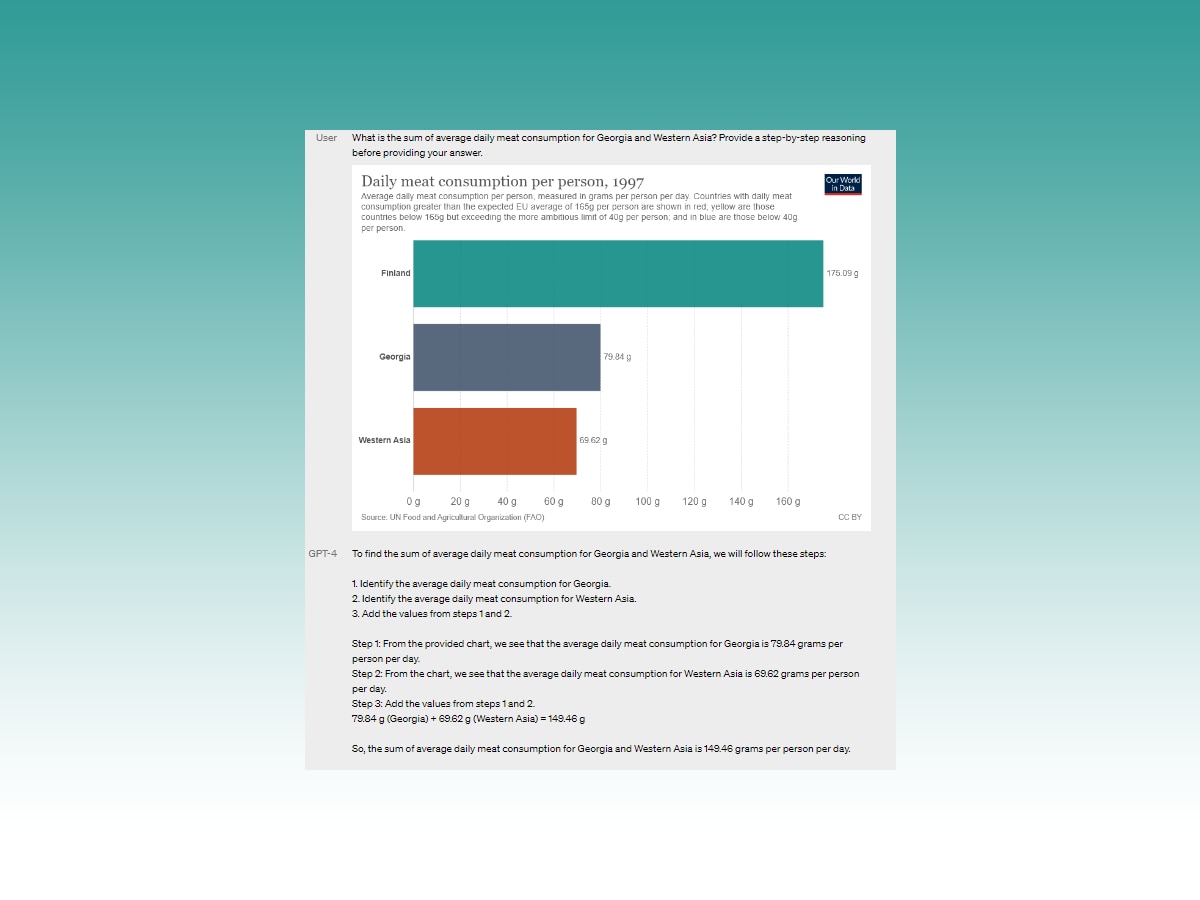

According to OpenAI, GPT-4 can process image inputs much as it handles text. It can read images and answer user queries about them: for example, it can interpret graphs (screenshot above), pick out notable details in images, and more.

What Are The Limitations Of GPT-4?

According to OpenAI, GPT-4 has similar limitations to earlier GPT models, including GPT-3.5, and is still not "fully reliable": it can still "hallucinate" facts and make reasoning errors. "Great care should be taken when using language model outputs, particularly in high-stakes contexts, with the exact protocol (such as human review, grounding with additional context, or avoiding high-stakes uses altogether) matching the needs of a specific use-case," the company said.

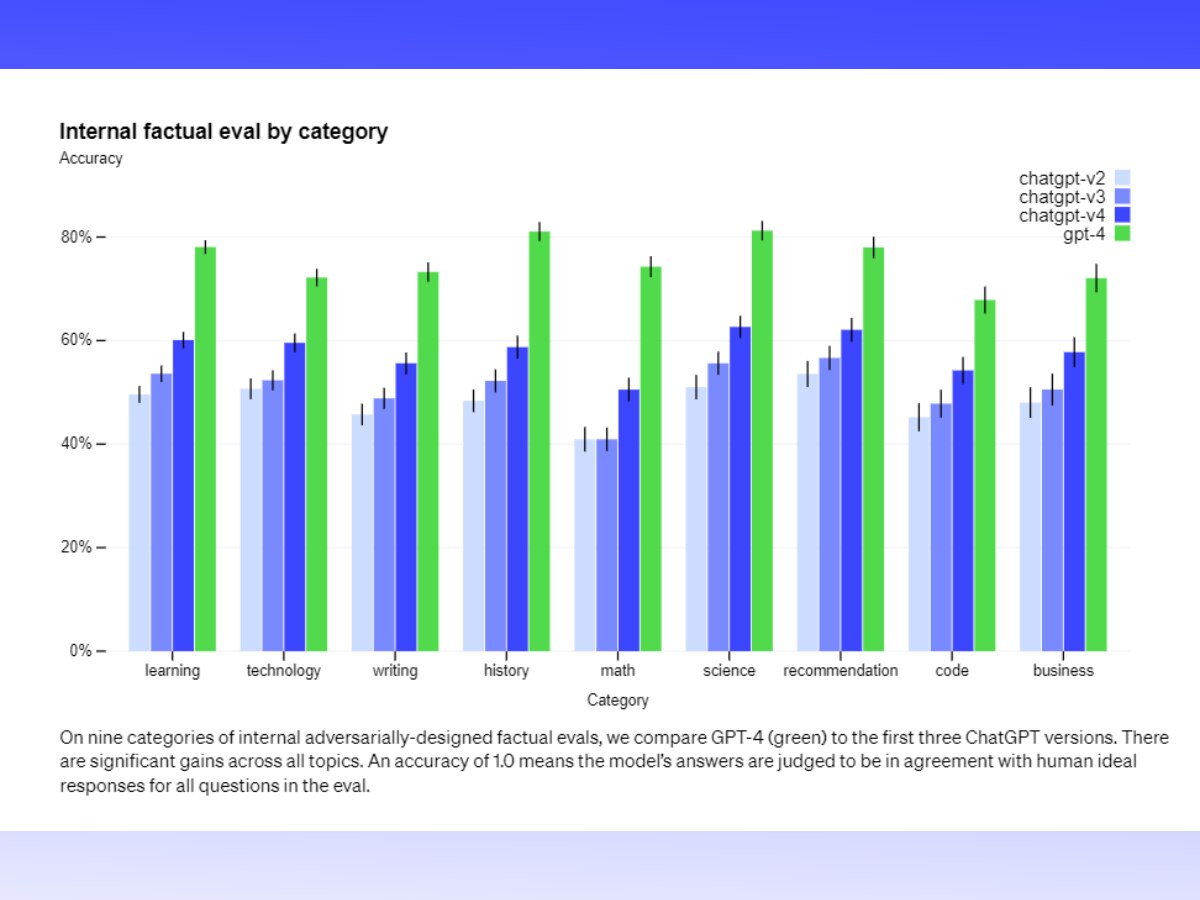

However, OpenAI also claimed that GPT-4 significantly reduces hallucinations compared with previous models. "GPT-4 scores 40 per cent higher than our latest GPT-3.5 on our adversarial factuality evaluations," OpenAI said.

Will GPT-4 Make ChatGPT More Capable?

The simple answer is: Definitely!

We all saw how ChatGPT brought global attention back to AI and its many tools and applications, and the GPT-3.5-powered chatbot has already shown it can handle a wide range of requests and tasks. Given GPT-4's greater capabilities, bringing the new large language model to ChatGPT is likely to open up new ways for individuals and organisations to put it to good use.

With GPT-4, you will be able to upload a graph and ask it to analyse it and list the key takeaways, upload an image and ask it to spot anomalies or unusual details, or upload an image and ask it to write an essay about it. Once GPT-4 is available for public use, more use cases are likely to emerge over time, as we saw with ChatGPT.

OpenAI says it has been using GPT-4 internally and has seen a notable impact on a range of functions, including content moderation, sales, and programming.

How To Access GPT-4?

For now, GPT-4 is available only to ChatGPT Plus subscribers (priced at $20 per month), subject to a usage cap. OpenAI said the cap will be adjusted "depending on demand and system performance in practice, but we expect to be severely capacity constrained."

OpenAI also said that, depending on traffic patterns, it may introduce a new subscription plan for "higher-volume GPT-4 usage."

OpenAI has also said it hopes to eventually offer a limited number of free GPT-4 queries, so that users without a subscription can try it too.