Did AI Misinformation Impact Elections In 2024? A Look At US, Europe, UK, India Polls

Fears of widespread AI misinformation ran rife in this historic election year. Although broadly unfounded, deepfakes and AI "slop" did taint some races. Logically Facts examines the global picture.

Fears of AI-generated misinformation ran rife ahead of this historic election year. With more than half of the global population expected to head to the polls, including voters in the U.S., U.K., India, Pakistan, and Bangladesh, media outlets and experts warned deepfakes, fake audios, and other AI-manipulated content might deceive and sway voters. Many expected an "AI armageddon."

But this did not materialize. AI misinformation proved far less widespread than expected, making up less than one percent of fact-checked content on Meta platforms and just 1.35 percent of Logically Facts' total of 1,695 fact-checks. As the election year ends, Logically Facts assesses the pertinent question: how did AI influence voters worldwide, if at all?

AI amplified existing partisan sentiment in the U.S.

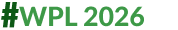

In the lead-up to the U.S. presidential election, AI-generated images were prevalent, with Democratic candidate Kamala Harris in particular targeted by fabricated visuals. Notable examples included AI-manipulated photos depicting Harris alongside controversial figures like Jeffrey Epstein and Diddy and AI-generated claims alleging Harris's history as a sex worker. Though some posts leaned towards satire or dark humor rather than attempting to deceive, they reflected the underlying misogyny Harris was subjected to throughout the campaign.

As such, AI tools were used less for outright misinformation and more as a means to ridicule and amplify partisan sentiment, entrenching already existing beliefs. "AI makes it easier to create these illustrations, but I don't see it as a game changer, and it won't sway voters," Dr. Sacha Altay, a postdoctoral research fellow at the University of Zurich's Digital Democracy Lab, told Logically Facts.

"I've seen much more AI slop, AI jokes, AI art, and AI porn than genuine AI-driven election misinformation," Altay continued. "Many of these are problematic, especially the non-consensual porn, but most are ultimately harmless." These AI-generated images were often shared by accounts known for regularly spreading misinformation, signaling that rather than intending to sway committed voters, these depictions of Harris largely reinforced already existing biases.

Why do some people fall for these often poorly executed visuals? "The biggest stumbling block for people is their own biases and emotions," Alex Mahadevan, director of MediaWise, a digital media literacy initiative, told Logically Facts. "It's easy to fall for poorly made AI images if you're angry or vindicated."

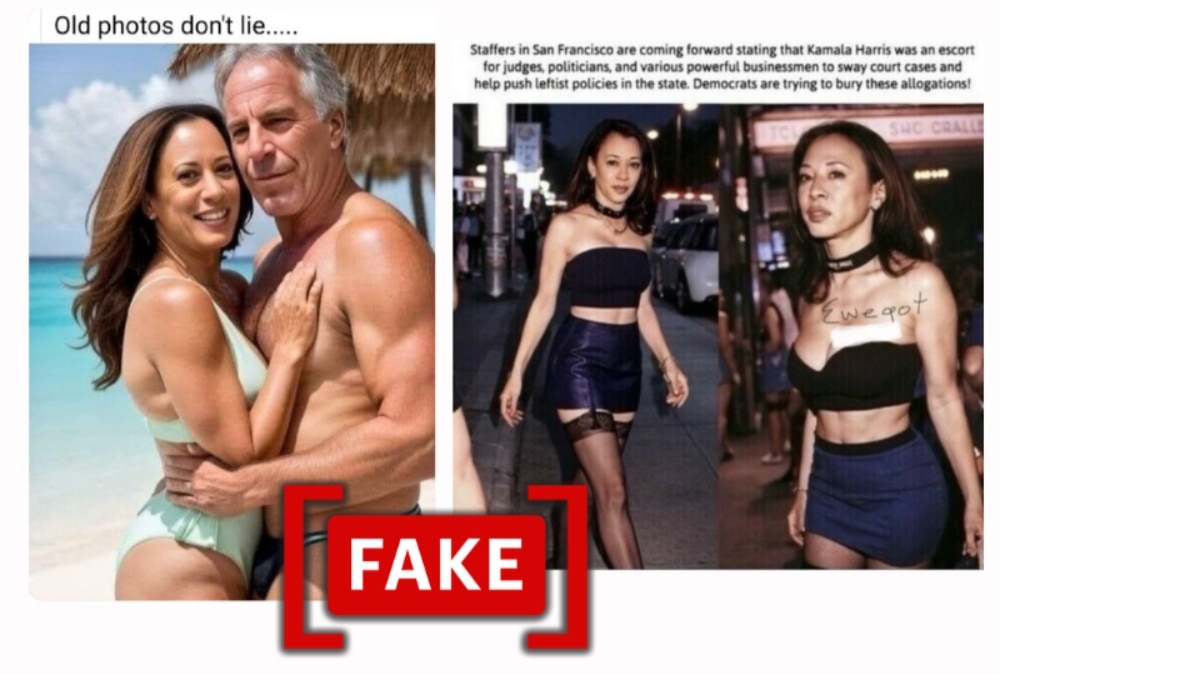

Fake celebrity endorsements emerged as another prolific strain of AI-generated misinformation during the run-up to the election. One example was amplified by Donald Trump, who reposted a series of AI-generated images portraying Taylor Swift fans wearing "Swifties for Trump" t-shirts. However, a month later, Taylor Swift publicly endorsed Kamala Harris. According to a YouGov poll, 53 percent of surveyed Americans believed Swift's endorsement of Harris could help her campaign. While measuring the electoral impact of individual AI-generated media or narratives is difficult, polls during the Harris-Trump race showed no significant shifts or sudden drops in support for either candidate, suggesting limited influence.

Deepfakes, the cause of most AI-related concerns leading up to the election year, proved less prevalent than expected. The most high-profile deepfake was Joe Biden's "robocalls" in February 2024, aimed at dissuading voters from participating in the New Hampshire Democratic primary. This was quickly debunked. Speculation about an "October surprise" involving a convincing deepfake circulated in the media, but these fears did not materialize.

Although meager, AI misinformation wasn't just domestic; evidence also points to some foreign interference. Logically Facts debunked an AI-manipulated video of a supposed former aide to Arizona Secretary of State Adrian Fontes, falsely alleging government plans to rig the election for Kamala Harris. The video was traced to a group linked to former Wagner leader Yevgeny Prigozhin. Meanwhile, the U.S. government seized 32 internet domains used in Russian government-directed influence campaigns, which relied on AI-generated content, influencers, and advertisements to undermine Harris's campaign and boost Donald Trump. In an election security update 45 days before the election, the U.S. government stated that Russia had produced the most AI content related to the presidential race among foreign actors.

Some social media companies took proactive steps to limit the spread of deepfakes, with Meta among them. In the month leading up to election day, Meta blocked 590,000 attempts to use its Imagine AI generator for deepfakes targeting figures such as Donald Trump, J.D. Vance, Kamala Harris, Tim Walz, and Joe Biden. OpenAI also said it had disrupted 20 influence operations and deceptive networks that attempted to use its models globally since the beginning of the year.

European and U.K. elections saw little meaningful AI misinformation

In Europe, the EU vigorously prepared for June's European Parliament elections, anticipating an "AI armageddon." Yet again, the actual impact of AI misinformation was minimal—a Centre for Emerging Technology and Security (CETaS) report identified just 11 viral cases in the EP and French elections combined.

In August 2024, two months after the election, the European Union's AI Act, the first comprehensive legal framework for artificial intelligence, officially took effect. Emmie Hine, a Research Associate at the Yale Digital Ethics Center, told Logically Facts that this legislation may have incentivized platforms to take rapid action on AI misinformation.

"While the AI Act didn't explicitly prohibit the use of AI in elections, it emphasized the responsibility of platforms to combat election-related misinformation and disinformation," Hine said.

Similarly, the July 4 general election in the United Kingdom demonstrated a limited influence of AI-driven misinformation. Traditional forms of misinformation, such as spreading unsubstantiated rumors or fabricating fake articles, dominated instead. According to the same report by CETaS, only 16 confirmed instances of AI-generated disinformation or deepfakes went viral during the election period. Logically Facts debunked nine false claims throughout the campaign, with just three involving verifiable AI-generated content, focusing on key voter issues like immigration and religious tensions. As with AI-generated misinformation in the U.S., AI was used to visually communicate the sentiment of some voters across the U.K., not necessarily to mislead others.

Indian enforcement authorities fail to take action on electoral AI

India's seven-phase general election, running from April 19 to June 1, saw the country of 1.4 billion people, with 820 million active internet users, go to the polls, with concerns about AI disinformation impacting the elections running rife.

During the campaign, AI was used in many ways, including real-time translations, AI-powered hyper-personalized avatars, and speeches by deceased leaders. However, in a Washington Post report from April 2024, Divendra Singh Jadoun, a prominent AI deepfake creator in the country, said that more than half of the requests he received from political parties for AI content were "unethical". These included fake audios of opponents making gaffes on the campaign trail, pornographic images of opponents, and requests from some campaigns for low-quality fake videos of their own candidates, meant to cast doubt on real videos of those candidates that could have negative consequences.

This maelstrom operated with little to no intervention from the Election Commission of India (ECI), the autonomous constitutional authority that monitors and conducts elections. The ECI sent a letter to political parties warning against the use of misleading AI content and urging parties to take posts down within three hours if they are found to be AI-generated. However, the body failed to bring in any specific regulations against misleading deepfakes. A report by an Indian digital rights body, the Internet Freedom Foundation, noted that action against political actors for violating the electoral code of conduct was "delayed and inadequate."

The Delhi High Court has also expressed its concerns about AI this year while hearing two public interest cases about the non-regulation of AI. In October 2024, the court observed that, given the size of India's population, the country will face problems with AI in the absence of legislation or regulation. It has since asked the central government to create a panel to examine the regulation of deepfakes.

Prateek Waghre, a tech policy researcher, says that AI is "another layer" to an already "extremely problematic information ecosystem" in the country that will only be exacerbated with time. According to him, enacting new laws when existing systems are already weak and subject to misuse by political powers is not the way forward. "Where legislation has come in, anti-disinformation and anti-fake news legislation, they have often been used to target political opponents or dissidents or activists or journalists," Waghre told Logically Facts.

He points to an example from the recently concluded Maharashtra state elections in November 2024, where the ruling Bharatiya Janata Party shared a fake AI audio of political opponent Supriya Sule discussing misusing funds from a cryptocurrency fraud to influence the state elections. This went viral before being debunked, but the ECI has taken no action as of December 9.

The South Asian situation: How elections went for India's neighbors

Pakistan and Bangladesh both saw general elections this year amid a volatile political climate: Pakistan's former Prime Minister Imran Khan was arrested in August 2023, engendering widespread protests, while Bangladesh's former Prime Minister Sheikh Hasina was ousted after violent protests in August of this year.

In the case of these two countries, local fact-checkers told Logically Facts that AI disinformation, while not a major issue, was used for negative campaigning purposes.

Sameen Aziz, a senior fact-checker and producer at Soch Media, Pakistan's only IFCN signatory, told Logically Facts that the major narratives centered on deepfakes attributed to political representatives, especially calling for election boycotts just before voting. The biggest challenge with these videos was their rapid dissemination and the lack of tools to combat them.

Screenshots of viral AI-generated claims from the Pakistan elections of PTI leaders, Basharat Raja (left) and Imran Khan (right), announcing election boycotts. (Source: X/Modified by Logically Facts)

"Detecting altered audio required specialized tools and expertise that were not always readily accessible; the same thing also happened with deepfakes," Aziz said. "Fact-checkers also encountered resistance from partisans who dismissed corrections as biased."

The lack of reliable technology also presented issues in Bangladesh, according to Sajjad Chowdhury, the Operations Lead and Senior fact-checker at RumorScanner. "One of the most significant challenges involved the claims of leaked phone records of political and administrative figures," Chowdhury told Logically Facts. "Due to the absence of reliable technology for audio detection, verifying these claims was not possible."

Aziz is also concerned that amended legislation aiming to combat online misinformation could limit individual freedoms if misused by the government. "Balancing regulation with freedom of expression will be critical in navigating this complex issue," she said.

AI misinformation: an ever-present threat

Ultimately, the disinformation apocalypse with an AI harbinger did not come to pass in 2024's general elections. Traditional methods of disinformation were still preferred by most actors and made up the mass of disinformation on platforms. However, AI disinformation remains a threat in countries without the framework in place to protect the common voter from manipulation.

While regulation and education could enhance information quality on social media, Altay emphasizes that "the problem lies more with the actors than the technology." AI is simply another tool to illustrate existing misconceptions, not the source of misinformation—although Mahadevan worries this could change as technology advances. "I am worried that this tech will allow bad actors to target individuals more precisely with AI-generated content tailored to their hopes and fears," he said.

Altay, however, has higher hopes. "Instead of focusing exclusively on the potential negative effects of AI, I think we should find ways to use AI to promote the quality and diversity of public discourse," he told Logically Facts.

This report first appeared on logicallyfacts.com, and has been republished on ABP Live as part of a special arrangement. Apart from the headline, no changes have been made in the report by ABP Live.
